I thought I shared this a while ago.

So I have new corporate overlords, as my firm was acquired by a larger fish. Their ConfigMgr environment was still on 2012 R2, running on Windows Server 2008 R2, so one of my projects was to determine a path forward for SCCM, and a prerequisite was to get them to Current Branch. This was around the 1710 version timeframe. We had to choose between upgrading ConfigMgr and then the server OS, or the servers first and then ConfigMgr. While I had 16xx Current Branch media around for the former, we elected to move all the roles to Windows Server 2012 R2 and then, once all were done, upgrade Configuration Manager itself. I'm sure I'll have notes on that.

First up was the SUP/WSUS environment, for which there were several servers. Below are random notes, not a how-to, from our experience of completely removing SUP and rebuilding from nothing on new 2012 R2 servers, as well as preparing them for new features in Current Branch. I would expect some of these issues to be resolved in newer server OS and/or ConfigMgr versions.

Before uninstall:

- When you make note of the current SUP settings, such as products and classifications, be sure to clear out the products and classifications in the SUP role. Leaving them in place will stop SUP from syncing with WSUS until you clear them, sync, set them up again, and sync once more.

- Go to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Update Services\Server\Setup and copy the SQLServerName value (REG_EXPAND_SZ) so you have the current SQL server and instance. Useful if it's on the primary site SQL server.

- Back up the SUSDB, just in case.

- On the SUP server, delete SUPSetup.LOG and WSUSCTRL.LOG from the ConfigMgr log directory so you have clean logs to start with. This came up mostly because of the first bullet, as we had to remove everything again and start over with empty settings.

- When you uninstall the WSUS role, also uninstall the WID (Windows Internal Database) feature on the server if it is installed.

- Delete SUSDB from the SQL server, as the new server version uses a new WSUS database version. We were going from 2008 R2 to 2012 R2 WSUS.

- During install of the WSUS role, it will enable WID even if you select SQL Server. On the Features dialog, uncheck it if you are using full SQL. Otherwise, uninstall it after WSUS is installed. We put SUSDB on the primary site database server, since that is supported and we were using the Enterprise Edition of SQL.

- If using a shared DB, enter the path to the root share, i.e. \\servername.domain.com\WSUS. Setup will append the WSUSContent folder to that path. We put the share on the first installed WSUS/SUP server.

- For the other servers, give their computer accounts full rights to the shared WSUSContent folder on the filesystem and/or the share.

- After install of the WSUS role, go to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Update Services\Server\Setup and change SQLServerName to your SQL server/instance, as it defaults to WID (MICROSOFT##WID) even if you selected full SQL for the database. Do this before you start the configure task in Server Manager, otherwise the task will fail. It is a good idea to change the value to the FQDN if you are not already using it.

- After configuration task goto SSMS if using full SQL and configure

- The account you installed with is the owner of SUSDB, change to sa

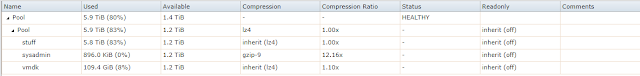

- set database file to 100MB growth unlimited for DB and 50MB growth max 10GB for logs. Should plan on approx 20GB WSUS, but that is pure WSUS.

- After the initial setup in Roles, go into the WSUS console to Options | Update Files and Languages | Update Languages and match what was chosen in SUP setup, such as EN only. It will be set to download all languages. SUP did not change this when it was configured later.

- In IIS Manager | Application Pools | WsusPool | Advanced Settings, set:

  - Private Memory Limit = 0

  - Queue Length = 25000

- For database sharing, you also need to configure all servers to share the ContentDir. Set it in IIS Manager | Server Name | WSUS Administration | Content | Manage Virtual Directory | Physical Path. Be sure to use the FQDN here, and check the path afterwards, as it forgets the slashes.
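Several of the steps above can also be done from an elevated command prompt instead of regedit, SSMS, and IIS Manager. The following is a minimal sketch, not what we actually ran: `sql01.domain.com\INSTANCE` is a hypothetical server and instance name, and the logical file names `SUSDB` and `SUSDB_log` are assumed defaults, so confirm them with `sp_helpfile` first. Run the lines one at a time at the point in the process where the matching bullet applies.

```bat
rem Show the SQL server/instance that WSUS is currently pointed at.
reg query "HKLM\SOFTWARE\Microsoft\Update Services\Server\Setup" /v SqlServerName

rem Point WSUS at full SQL before running the configure task.
rem sql01.domain.com\INSTANCE is a placeholder; substitute your own.
reg add "HKLM\SOFTWARE\Microsoft\Update Services\Server\Setup" /v SqlServerName /t REG_EXPAND_SZ /d "sql01.domain.com\INSTANCE" /f

rem After the configure task: change the SUSDB owner to sa.
sqlcmd -S sql01.domain.com\INSTANCE -E -Q "ALTER AUTHORIZATION ON DATABASE::SUSDB TO sa;"

rem List the logical file names before changing growth settings.
sqlcmd -S sql01.domain.com\INSTANCE -E -d SUSDB -Q "EXEC sp_helpfile;"

rem Data file: 100MB growth, unlimited size (assumes logical name SUSDB).
sqlcmd -S sql01.domain.com\INSTANCE -E -Q "ALTER DATABASE SUSDB MODIFY FILE (NAME = N'SUSDB', FILEGROWTH = 100MB, MAXSIZE = UNLIMITED);"

rem Log file: 50MB growth, 10GB maximum (assumes logical name SUSDB_log).
sqlcmd -S sql01.domain.com\INSTANCE -E -Q "ALTER DATABASE SUSDB MODIFY FILE (NAME = N'SUSDB_log', FILEGROWTH = 50MB, MAXSIZE = 10GB);"

rem WsusPool: Private Memory Limit = 0 and Queue Length = 25000.
%windir%\system32\inetsrv\appcmd.exe set apppool "WsusPool" /recycling.periodicRestart.privateMemory:0
%windir%\system32\inetsrv\appcmd.exe set apppool "WsusPool" /queueLength:25000
```

The `-E` switch uses Windows authentication, so the account running these needs sufficient rights on the SQL instance.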

Post install:

We did not have this problem, but we expected it given how long the existing SUP/WSUS had been in place. You might have an issue with the catalog version.

- http://rzander.azurewebsites.net/query-to-get-mincatalogversion-from-sccm-updates/

- https://windowsbag.wordpress.com/2017/05/15/how-to-install-sup-with-wsus-step-by-step/

- https://social.technet.microsoft.com/Forums/en-US/00ca5b73-5972-436d-b084-046a3602b931/sup-role-reinstall-after-2012-r2-sp1-upgrade-fails-failed-to-get-installation-root-path-for-net?forum=configmanagergeneral

- select * from [SUSDB].[dbo].[tbConfigurationB]

%windir%\system32\inetsrv\appcmd.exe set config -section:system.webServer/httpCompression /-[name='xpress']
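The line above removes the `xpress` entry from the IIS httpCompression section. As a small sketch, assuming you want to confirm whether that entry is present before removing it (and that it is gone afterwards), the section can be listed with appcmd:

```bat
rem List the current httpCompression section, including any 'xpress' scheme entry.
%windir%\system32\inetsrv\appcmd.exe list config -section:system.webServer/httpCompression
```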

-Kevin