As we all know, the ConfigMgr OSD deployment process can sometimes be finicky. Start imaging two identical computers and one might fail while the other succeeds. There could be a number of reasons:

- DP replication issues

- Planets are not in alignment

- Network issues

- Someone cut the hard line

- ???

Whatever the cause, if the error is at the beginning of the task sequence there is no major downtime. If the error is towards the end, though, that 2-3 hour image deployment can take 4-6 hours once the tech starts it over again. And for what? Adobe Reader didn't install because someone or something updated the DP content?

Issue

We typically do not use the “Continue on error” flag in our task sequences. If a particular step fails, it's difficult for us to know about that failure until the image is complete, or possibly until the machine is deployed and someone goes looking for that software. For example, if Adobe Reader fails, the entire TS errors out and the tech gets frustrated because they have to start all over again. They notify the support structure and we fight to get logs so we can figure out what happened. In most cases we end up having them try again, this time all is right, and life moves on. I've always been of the mindset that if it failed, we should start over, since the machine is not in a "clean" state when deployed. The approach below is a bit of a compromise with my techs. So how can we consistently deploy a computer at the end of the imaging process that is as complete as possible and, if it is not, notifies the technician that there are installs still needed?

Resolution

The fix for this is quite simple, if laborious, depending on how far you take it.

Others have captured logs via the TS. This approach, however, opens up a world of possibilities for reporting and data gathering (logs! logs! logs!). It also consistently produces a computer that the tech can use even if there was a small error or two. In VB.NET programming terms it is called a 'try…catch block'. You “try” a particular command, and if there is an error, the “catch” step in the block captures and acts on the error so the code can continue.

In task sequence terms, you simply wrap a step in a parent group that has the 'Continue on error' flag checked. When the step fails, the TS falls back to that parent group and continues on. The 'catch' is a new group placed as the very next step, with a specific condition attached so that it catches the error and performs an action.

When a TS step fails, the task sequence engine sets the built-in variable '_SMSTSLastActionSucceeded' to false. The 'catch' group has a condition so that it runs only if '_SMSTSLastActionSucceeded' = false. In that group we have a step named 'Create Log'. It uses a script to capture all the logs from the current log path (the built-in '_SMSTSLogPath' variable) and copy them to %OSDISK%\OSDLogs. OSDISK is set in the 'Partition Disk' steps, as MDT does.

That way, if we need logs from techs we don't have to fight to find them, since the log location moves around during the TS. Each error gets its own timestamp-named folder. If there are multiple errors, each one generates its own folder structure with the logs relevant to that error. After the catch steps run, the last action succeeded variable resets to “True” and the TS continues on, yet the tech still needs to be notified.
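The group semantics are easier to follow in a runnable model. The Python below is a toy model only, not the ConfigMgr engine, and it approximates the behavior described above:

- A failing step sets `_SMSTSLastActionSucceeded` to "false" and stops its group.
- A group with continue-on-error lets its parent move on.
- The catch group runs only when that variable is "false".

The step-name variable `OSDStepName` and the "Office" step are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Union

Env = Dict[str, str]  # stands in for the task sequence variable collection


@dataclass
class Step:
    name: str
    action: Callable[[Env], bool]  # returns True on success
    continue_on_error: bool = False
    condition: Optional[Callable[[Env], bool]] = None


@dataclass
class Group:
    name: str
    children: List[Union[Step, "Group"]] = field(default_factory=list)
    continue_on_error: bool = False
    condition: Optional[Callable[[Env], bool]] = None


def run(node: Union[Step, Group], env: Env, trace: List[str]) -> bool:
    """Run a step or group; return False if it failed (skipped counts as success)."""
    if node.condition is not None and not node.condition(env):
        return True
    if isinstance(node, Step):
        ok = node.action(env)
        # Toy approximation: every executed step records its own outcome.
        env["_SMSTSLastActionSucceeded"] = "true" if ok else "false"
        trace.append(f"{node.name}: {'ok' if ok else 'FAILED'}")
        return ok
    for child in node.children:
        if not run(child, env, trace) and not child.continue_on_error:
            return False  # stop this group; the parent decides what happens next
    return True


failed_steps: List[str] = []


def set_step_name(value: str) -> Callable[[Env], bool]:
    """Mimics a 'Set Task Sequence Variable' step (hypothetical OSDStepName)."""
    def action(env: Env) -> bool:
        env["OSDStepName"] = value
        return True
    return action


def create_log(env: Env) -> bool:
    failed_steps.append(env.get("OSDStepName", "Unknown"))
    return True


def last_action_failed(env: Env) -> bool:
    return env.get("_SMSTSLastActionSucceeded") == "false"


def try_catch(step_name: str, install: Callable[[Env], bool]) -> List[Group]:
    """A try group (continue on error) followed by its conditional catch group."""
    return [
        Group(f"Try {step_name}", [
            Step(f"Set OSDStepName={step_name}", set_step_name(step_name)),
            Step(f"Install {step_name}", install),
        ], continue_on_error=True),
        Group(f"Catch {step_name}", [Step("Create Log", create_log)],
              condition=last_action_failed),
    ]


ts = Group("Task Sequence",
           try_catch("AdobeReader", lambda env: False)  # simulated failure
           + try_catch("Office", lambda env: True))     # hypothetical step

env: Env = {}
trace: List[str] = []
print(run(ts, env, trace))  # True: the TS finishes despite the failure
print(failed_steps)         # ['AdobeReader']
```

In this model the Office catch group is skipped, because the Office install sets the variable back to "true".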

Before a particular step runs, I set a variable to something relevant to that step. When applying the base WIM, it is set to “ApplyWIM”. Installing Adobe Reader is “AdobeReader”, and installing Windows Updates is “Updates”. You get the picture. Note that these values are shown in the dialog at the end, so use whatever makes sense to you. Place that step anywhere you might need to know about a failure. If a failure does occur, the script used by the “Create Log” step reads that variable and writes the step name to a text file in the %OSDISK%\OSDLogs folder.
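The post's own scripts are in the download below. The Python here only sketches the same “Create Log” logic under assumptions:

- The caller passes in the values of `_SMSTSLogPath`, `%OSDISK%\OSDLogs` and the step-name variable (hypothetically `OSDStepName`).
- Failed steps are appended to a hypothetical `FailedSteps.txt`.

A stock WinPE boot image does not include Python, so treat this as a template to port rather than a drop-in step.

```python
import shutil
import sys
from datetime import datetime
from pathlib import Path
from typing import Optional

FAILED_STEPS_FILE = "FailedSteps.txt"  # hypothetical file name


def create_log(log_path: str, osd_logs: str, step_name: str,
               now: Optional[datetime] = None) -> Path:
    """Copy the current TS logs into a new timestamped folder and record the step."""
    now = now or datetime.now()
    # One folder per error; the step name is appended to avoid same-second collisions.
    dest = Path(osd_logs) / f"{now:%Y%m%d-%H%M%S}_{step_name}"
    shutil.copytree(log_path, dest)  # also creates osd_logs if it is missing
    with open(Path(osd_logs) / FAILED_STEPS_FILE, "a", encoding="utf-8") as fh:
        fh.write(step_name + "\n")
    return dest


if __name__ == "__main__" and len(sys.argv) == 4:
    # Hypothetical command line: create_log.py "%_SMSTSLogPath%" "%OSDISK%\OSDLogs" "%OSDStepName%"
    print(create_log(sys.argv[1], sys.argv[2], sys.argv[3]))
```

Appending to a single text file keeps every failure in order. The end-of-TS dialog then has one place to read from.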

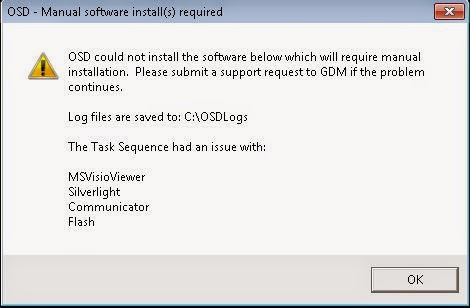

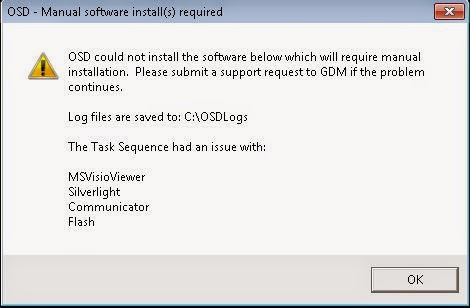

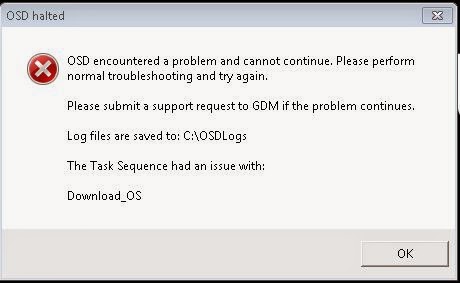

At the very end of the TS, a VBScript message box is displayed that lists some basic instructions as well as the item(s) that failed (below). The tech can then install whatever was missed after the task sequence finishes.
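The message box text can be built directly from the failure file. This Python sketch assumes the hypothetical `FailedSteps.txt` holds one step name per line. The instruction wording is a placeholder, and showing the actual dialog is left to the VBScript.

```python
from pathlib import Path
from typing import List


def read_failed_steps(failed_file: str) -> List[str]:
    """Return the recorded step names in order, without duplicates."""
    path = Path(failed_file)
    if not path.exists():
        return []
    seen: List[str] = []
    for line in path.read_text(encoding="utf-8").splitlines():
        name = line.strip()
        if name and name not in seen:
            seen.append(name)
    return seen


def build_message(failed: List[str]) -> str:
    """Compose the end-of-TS text; an empty string means nothing to show."""
    if not failed:
        return ""
    lines = ["Imaging finished, but the following items failed:"]
    lines += [f"  - {name}" for name in failed]
    lines.append("Install these items manually and check %OSDISK%\\OSDLogs for logs.")
    return "\n".join(lines)
```

Deduplicating matters if the same step is retried and fails twice in one run.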

Now there is a big difference between Adobe Reader not installing

and the OS WIM not being downloaded. Adobe Reader can be installed by the tech obviously, or even have the TS put this system in Collection to deal with it, however if the there is an

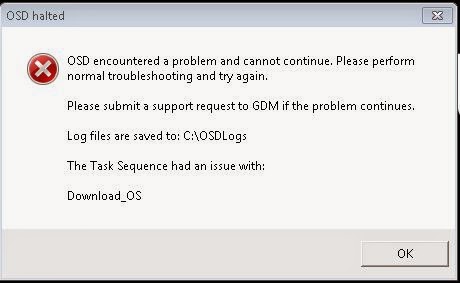

issue downloading the WIM, there is not much the tech can do at that point. To address this, I have two final message

boxes I use for “Major” and “Minor” failures.

Major failures are anything that can stop the imaging process. Better yet, they are any step whose failure means we would not want to, or cannot, deploy the machine. Minor failures are items that a tech should be able to resolve easily.

My “Major” message is below.

For minor errors I want the TS to continue to the next step, but for major errors I want it to stop. For that reason, my TS has no catch groups until the software installs begin. I still set the step variables, though, so if there is a major failure I will know where it happened.

The entire TS is enclosed in a try…catch block. If there is an error, it always falls back to the top-level group and continues on to the catch group, which has steps to notify the techs depending on the failure. Conditions are set so that if the failed step equals one of the major steps, it runs “Notify Tech – Major failure” in the sample TS. Anything else runs the minor notification.

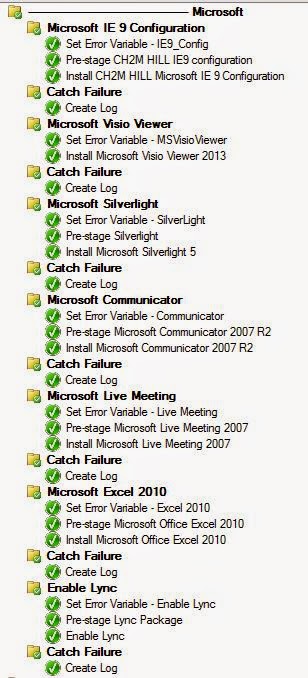

The TS shown here is an oversimplified sample that shows the basic structure.
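The two notify conditions amount to a small decision rule. This Python sketch expresses that rule as a function. In the TS itself it would be conditions on the two notify steps, comparing the step-name variable against each major step name. Only "ApplyWIM" comes from the post; the rest of the set is up to you.

```python
from typing import Iterable, Optional, Set

# Step names whose failure means the machine should not be deployed.
# "ApplyWIM" comes from the post; extend the set for your own TS.
MAJOR_STEPS: Set[str] = {"ApplyWIM"}


def failure_severity(failed: Iterable[str],
                     major_steps: Set[str] = MAJOR_STEPS) -> Optional[str]:
    """Return "Major", "Minor", or None, mirroring the notify-step conditions."""
    failed = list(failed)
    if not failed:
        return None  # nothing failed: no dialog, just the login screen
    if any(step in major_steps for step in failed):
        return "Major"
    return "Minor"
```

Checking "Major" first means a single major failure wins even if minor items also failed.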

When I first started experimenting, I placed the entire TS in a try…catch block. Once I saw it worked, I started grouping software into try blocks, as I wasn't sure whether I would hit a limit on the number of steps allowed in a TS. I had all MS software in one block and Adobe software in another. The problem was that if a particular step failed, it stopped processing everything else in that group. The catch afterward would catch the error and proceed to the next group, but any step after the failure in the “Install Microsoft Software” group would not get run.
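This trade-off is the same as coarse versus fine-grained exception handling in ordinary code. The Python sketch below uses plain try/except as an analogy, not TS syntax. The three Microsoft step names are hypothetical, and the second one fails.

```python
from typing import Callable, List, Tuple

Install = Tuple[str, Callable[[], None]]  # (step name, install action)


def run_grouped(installs: List[Install]) -> Tuple[List[str], List[str]]:
    """One try block around the whole group, like one 'Install Microsoft Software' group."""
    attempted: List[str] = []
    failed: List[str] = []
    try:
        for name, install in installs:
            attempted.append(name)
            install()
    except Exception:
        failed.append(attempted[-1])  # every step after this one is skipped
    return attempted, failed


def run_per_step(installs: List[Install]) -> Tuple[List[str], List[str]]:
    """One try/catch per install step, so a failure only affects that step."""
    attempted: List[str] = []
    failed: List[str] = []
    for name, install in installs:
        attempted.append(name)
        try:
            install()
        except Exception:
            failed.append(name)
    return attempted, failed


def succeed() -> None:
    pass


def fail() -> None:
    raise RuntimeError("simulated install error")


installs = [("Office", succeed), ("Visio", fail), ("Project", succeed)]
print(run_grouped(installs))   # (['Office', 'Visio'], ['Visio'])
print(run_per_step(installs))  # (['Office', 'Visio', 'Project'], ['Visio'])
```

Both versions report the same failure, but only the per-step version still attempts "Project".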

This is a sample of the MS section. You can ignore the 'pre-stage' steps, as those are for 1E Nomad (posts coming soon!). So I set out on a journey to see if I could put a try…catch block around every software install step that was important enough to catch. I wound up with a lengthy TS, but so far it is consistently doing what it was built for. It produces an imaged computer at the end, captures any errors, and notifies the tech afterwards if there is a failure.

A relatively minor failure to install a package no longer increases the imaging time, which frees up techs to do other things. If the image is successful, the techs notice nothing other than what they are used to: a Ctrl+Alt+Del login screen when it completes.

After that endeavor, I started thinking of other possibilities. The TS now compresses the %OSDISK%\OSDLogs folder and copies it to a network share. In the case of a failure, an email is sent to our support group inbox advising of the failure, so we can jump on the issue proactively. Depending on the step that failed, I gather additional logs that can help determine the cause. For example, I copy WindowsUpdate.log if there is a problem installing updates, or the logs from the %WINDIR%\Panther folder if there is a problem applying the OS. In the screenshots below, several items failed, and going into Reader you can see it grabbed all the relevant logs up to that point.
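Here is a minimal Python sketch of the step-specific gathering and the compress-and-copy step. The mapping uses the step names and log locations from the post, but the mapping itself is illustrative. `windir` is the target OS's Windows folder (which may not be `C:\Windows` while still in WinPE), the share path and computer name are placeholders, and the email notification is not sketched.

```python
import shutil
from pathlib import Path
from typing import Dict, List

# Extra sources per failed step, relative to the target %WINDIR%.
# Step names and files come from the post; the mapping is an illustration.
EXTRA_LOGS: Dict[str, List[str]] = {
    "Updates": ["WindowsUpdate.log"],
    "ApplyWIM": ["Panther"],
}


def gather_extra_logs(step_name: str, windir: str, dest: str) -> List[str]:
    """Copy step-specific logs into dest; return what was actually copied."""
    copied: List[str] = []
    for rel in EXTRA_LOGS.get(step_name, []):
        src, target = Path(windir) / rel, Path(dest) / rel
        if src.is_dir():
            shutil.copytree(src, target, dirs_exist_ok=True)  # Python 3.8+
        elif src.is_file():
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, target)
        else:
            continue  # source not present on this machine
        copied.append(rel)
    return copied


def archive_to_share(osd_logs: str, share: str, computer_name: str) -> Path:
    """Zip the whole OSDLogs folder onto the share as <computer_name>.zip."""
    base = Path(share) / computer_name
    return Path(shutil.make_archive(str(base), "zip", root_dir=osd_logs))
```

Naming the archive after the computer keeps one file per machine on the share.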

Since we are gathering data on failures via the error logs, why not gather data on the successful images as well? To that end, I now gather start and end times when an image is successful. If we ever want to see how long an image is taking, we have the data outside of reports. This can also be useful for tuning OSD or for noticing that imaging takes 20% longer in office X than in office Y.
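A minimal Python sketch of the timing data follows. It assumes script steps have stored the start and end timestamps in hypothetical TS variables (for example `OSDStartTime` and `OSDEndTime`) using the format below. It also assumes a site name is available to tag each row. The CSV layout is an illustration, not the post's format.

```python
import csv
from datetime import datetime
from pathlib import Path
from typing import Dict, List

TIME_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed format of the captured timestamps
HEADER = ["Computer", "Site", "Start", "End", "Minutes"]


def record_image_time(csv_path: str, computer: str, site: str,
                      start: str, end: str) -> float:
    """Append one successful image to a CSV and return its duration in minutes."""
    elapsed = datetime.strptime(end, TIME_FORMAT) - datetime.strptime(start, TIME_FORMAT)
    minutes = round(elapsed.total_seconds() / 60, 1)
    is_new = not Path(csv_path).exists()
    with open(csv_path, "a", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        if is_new:
            writer.writerow(HEADER)
        writer.writerow([computer, site, start, end, minutes])
    return minutes


def average_minutes_by_site(csv_path: str) -> Dict[str, float]:
    """Average imaging time per site, e.g. to spot an office that runs slower."""
    by_site: Dict[str, List[float]] = {}
    with open(csv_path, newline="", encoding="utf-8") as fh:
        for row in csv.DictReader(fh):
            by_site.setdefault(row["Site"], []).append(float(row["Minutes"]))
    return {site: sum(v) / len(v) for site, v in by_site.items()}
```

Suppose one machine in office X takes 120 minutes and one in office Y takes 144 minutes. The averages then come out as 120.0 and 144.0, which is the 20% gap mentioned above.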

And that is really what this is all about: gathering the data you need at the time. That gives you a baseline to improve processes and lets you fix an issue expeditiously, even proactively before it blows up.

To go one step further, you can reference this post for simple cleanup of the log folders.

Download

This script is provided as-is; no warranty is provided or implied. The author is NOT responsible for any damages or data loss that may occur through the use of this script. Always test, test, test before rolling anything into a production environment.

You can find all the scripts referenced in this post, as well as a sample TS (2012R2), here. You will need to tweak them for your environment, for things like variables and log paths. The scripts should eventually be updated to be more distribution-friendly.

-Kevin